ChatGPT energy usage is 0.34 Wh per query

Now that we have a number for AI energy consumption, what can we use it for? What else do we need to know for it to be useful?

In a June 10 post on his personal blog, OpenAI CEO Sam Altman wrote:

People are often curious about how much energy a ChatGPT query uses; the average query uses about 0.34 watt-hours, about what an oven would use in a little over one second, or a high-efficiency lightbulb would use in a couple of minutes. It also uses about 0.000085 gallons of water; roughly one fifteenth of a teaspoon.

This is several orders of magnitude less than most estimates we’ve seen in the media (mainstream and academic). Now that we have a real number, perhaps the overestimates of AI energy and water consumption will stop?
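The everyday comparisons in the quote can be sanity-checked with a few lines of arithmetic. This is a minimal sketch: the oven and lightbulb wattages below are my own assumptions (the post does not state them), so the output only shows that the comparisons are plausible, not how they were derived.

```python
# Figures taken from the quoted post.
ENERGY_WH = 0.34
WATER_GALLONS = 0.000085

# Assumptions for illustration only; the post does not give these values.
OVEN_WATTS = 1000.0   # assumed oven power draw
BULB_WATTS = 10.0     # assumed high-efficiency (LED) bulb

# 1 US gallon = 128 fl oz, 1 fl oz = 6 teaspoons.
TEASPOONS_PER_US_GALLON = 768.0


def seconds_to_use(energy_wh, power_watts):
    """Seconds a device drawing power_watts takes to use energy_wh."""
    return energy_wh * 3600.0 / power_watts


oven_seconds = seconds_to_use(ENERGY_WH, OVEN_WATTS)
bulb_minutes = seconds_to_use(ENERGY_WH, BULB_WATTS) / 60.0
teaspoons = WATER_GALLONS * TEASPOONS_PER_US_GALLON

print(f"Oven: {oven_seconds:.2f} s")
print(f"Bulb: {bulb_minutes:.2f} min")
print(f"Water: 1/{1 / teaspoons:.1f} of a teaspoon")
```

Under these assumed wattages the results line up with "a little over one second", "a couple of minutes" and "roughly one fifteenth of a teaspoon".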

What’s missing?

Although it’s good to have a number to compare to estimates, there are plenty of things missing from this quote. To use this number meaningfully, we need context:

What is an average query? One exchange? A full conversation thread? What happens when a query hits ChatGPT’s search index versus its search bot, which goes out to websites in real time?

How do multimodal requests affect this? What is the impact of analyzing a PDF or generating an image?

Is energy from supporting systems (storage, networking, cooling) included?

Which model is being measured? GPT-3.5? GPT-4o? Some are far more compute-intensive than others.

Is this just for inference? Does it consider the resources required for training?

Which year is this for? Is it an estimate over all usage for that time period? How has it changed over the years?

Water usage varies by location. Is this a global average, or specific to a data center region?

Is this “water consumption” direct (evaporative cooling) or indirect (e.g., hydroelectric power generation)?

What about carbon? With a specific location we can better understand the electricity mix and create carbon footprint estimates.
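To illustrate why location matters for the carbon question, here is a minimal sketch that converts the 0.34 Wh figure into grams of CO2e per query. The grid carbon intensities are hypothetical round numbers chosen to span a wide range; they are not measurements for any real data center region.

```python
ENERGY_WH_PER_QUERY = 0.34  # figure from the quoted post

# Hypothetical grid carbon intensities in gCO2e per kWh (illustrative only).
HYPOTHETICAL_GRIDS = {
    "low_carbon_grid": 50.0,
    "mixed_grid": 400.0,
    "coal_heavy_grid": 800.0,
}


def grams_co2e_per_query(energy_wh, intensity_g_per_kwh):
    """Convert Wh to kWh, then multiply by the grid's carbon intensity."""
    return energy_wh / 1000.0 * intensity_g_per_kwh


for grid, intensity in HYPOTHETICAL_GRIDS.items():
    grams = grams_co2e_per_query(ENERGY_WH_PER_QUERY, intensity)
    print(f"{grid}: {grams:.3f} g CO2e per query")
```

The same energy figure gives a 16-fold spread in emissions across these assumed grids, which is why a per-query energy number alone cannot be turned into a carbon footprint.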

What does it mean for future AI energy usage?

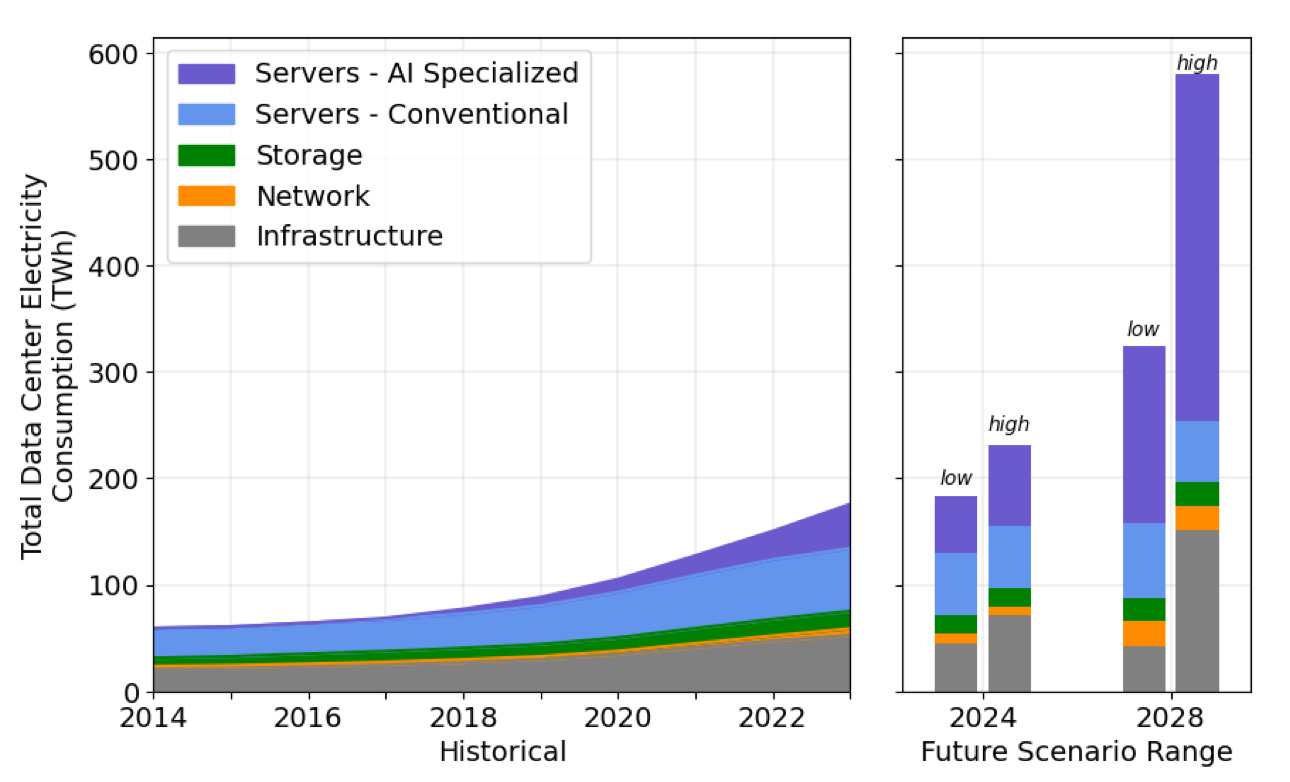

Energy intensity shows per-query impact, but it says nothing about total energy use, which depends on scale. Over the last decade we’ve seen significant improvements in IT energy efficiency, which have meant that total data center energy consumption has grown only slightly despite a major expansion in usage.
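The relationship between intensity and total is a simple multiplication, sketched below. The daily query volumes are hypothetical placeholders, not reported figures, and the sketch assumes the 0.34 Wh average holds at every volume.

```python
ENERGY_WH_PER_QUERY = 0.34  # per-query figure from the quoted post

# Hypothetical daily query volumes, chosen only to show how the total scales.
HYPOTHETICAL_QUERIES_PER_DAY = [1e8, 1e9, 1e10]


def annual_energy_gwh(energy_wh_per_query, queries_per_day, days=365):
    """Total yearly energy in GWh for a constant daily query volume."""
    total_wh = energy_wh_per_query * queries_per_day * days
    return total_wh / 1e9  # 1 GWh = 1e9 Wh


for volume in HYPOTHETICAL_QUERIES_PER_DAY:
    total = annual_energy_gwh(ENERGY_WH_PER_QUERY, volume)
    print(f"{volume:.0e} queries/day -> {total:,.1f} GWh/year")
```

A tenfold increase in usage cancels out a tenfold improvement in per-query efficiency, so both numbers are needed to say anything about the total.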

I expect to see continuing improvements in the energy efficiency of AI, but total energy consumption is likely to increase because of the huge usage AI is seeing. The 2024 US Data Center Energy Report reflects this: it estimates that US data centers will consume 325 to 580 TWh (6.7% to 12% of total US electricity consumption) by 2028, mostly driven by AI.
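As a quick consistency check on those projections, dividing each TWh figure by its share gives the total US electricity consumption the report's range implies for 2028. This is only back-of-the-envelope arithmetic on the rounded numbers quoted above, not a figure taken from the report itself.

```python
# (data center TWh, share of total US electricity) as quoted above.
PROJECTIONS_2028 = {
    "low": (325.0, 0.067),
    "high": (580.0, 0.12),
}


def implied_total_twh(data_center_twh, share):
    """Total consumption implied by a data center figure and its share."""
    return data_center_twh / share


for scenario, (twh, share) in PROJECTIONS_2028.items():
    total = implied_total_twh(twh, share)
    print(f"{scenario}: {twh:.0f} TWh at {share:.1%} implies ~{total:,.0f} TWh total")
```

Both ends of the range imply a similar national total, so the spread in the projection comes from data center demand rather than from different assumptions about overall consumption.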

The puritan movement has moved on from the environmental impact of video streaming because AI is the new trendy thing to attack. The major players like OpenAI haven’t helped themselves by remaining so secretive about their energy and water footprint, but this is a small step in the right direction. I hope we’ll see more official metrics (not just a blog post from the CEO) and details on some of the open questions above!

Isn't it fair to say that "ChatGPT claims that its energy usage is 0.34 Wh per query"?

I've seen some lively debates about the accuracy of this claim.